Runtime error when attempting to use data distributed parallel · Issue #19 · lucidrains/reformer-pytorch · GitHub

Reformer for Translation task. Assertion Error: Sequence length needs to be divisible by bucket size*2 · Issue #72 · lucidrains/reformer-pytorch · GitHub

Reproducibility Challenge 2020 - fastai folks interested - #39 by stefan-ai - Deep Learning - fast.ai Course Forums

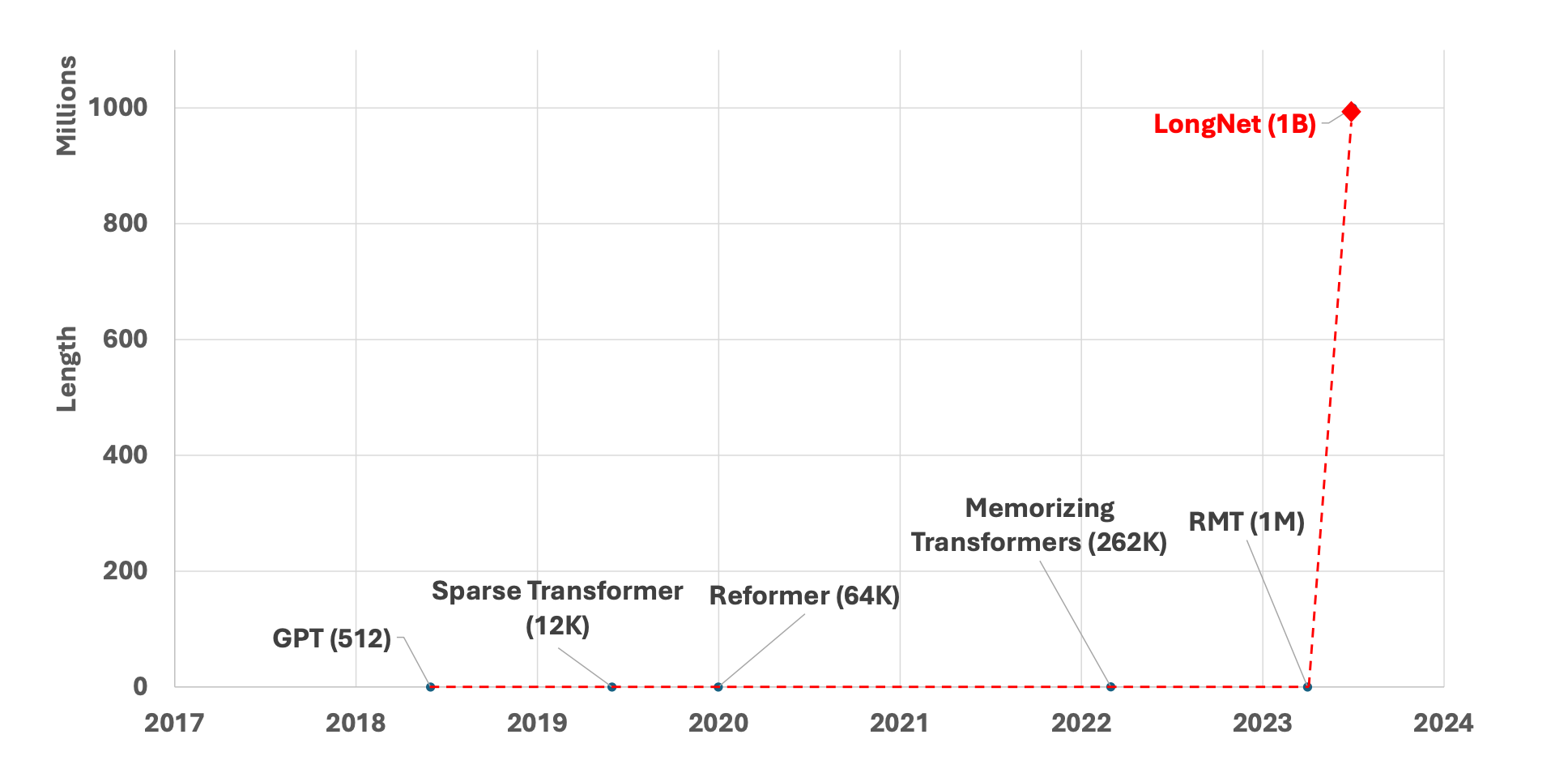

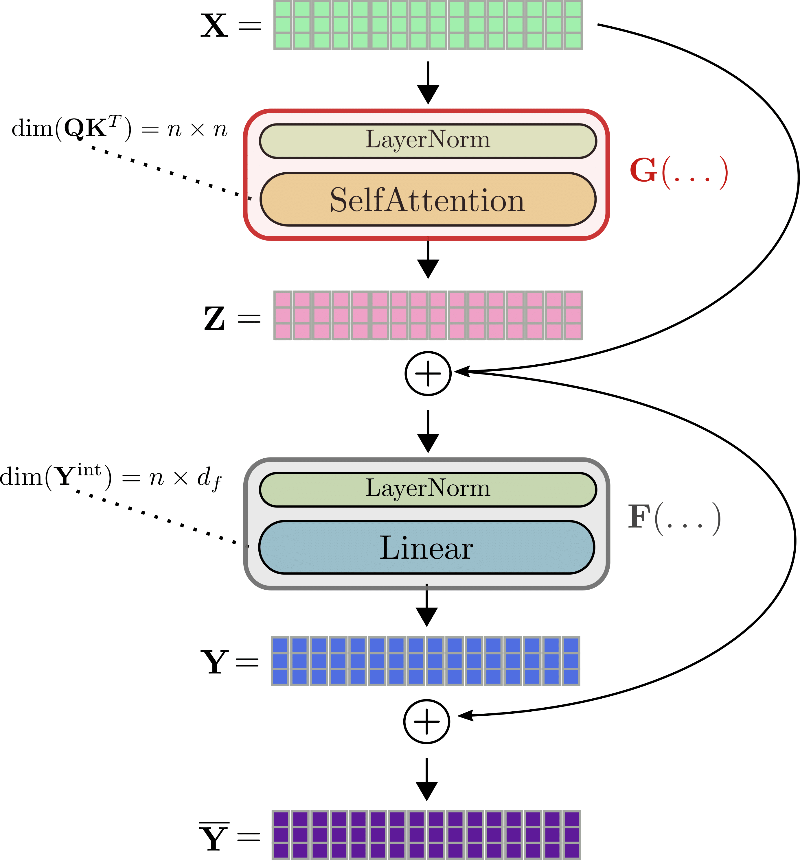

💡 Illustrating the Reformer. 🚊 The efficient Transformer | by Alireza Dirafzoon | Towards Data Science
![[PDF] FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/87c5b281fa43e6f27191b20a8dd694eda1126336/31-Table9-1.png)